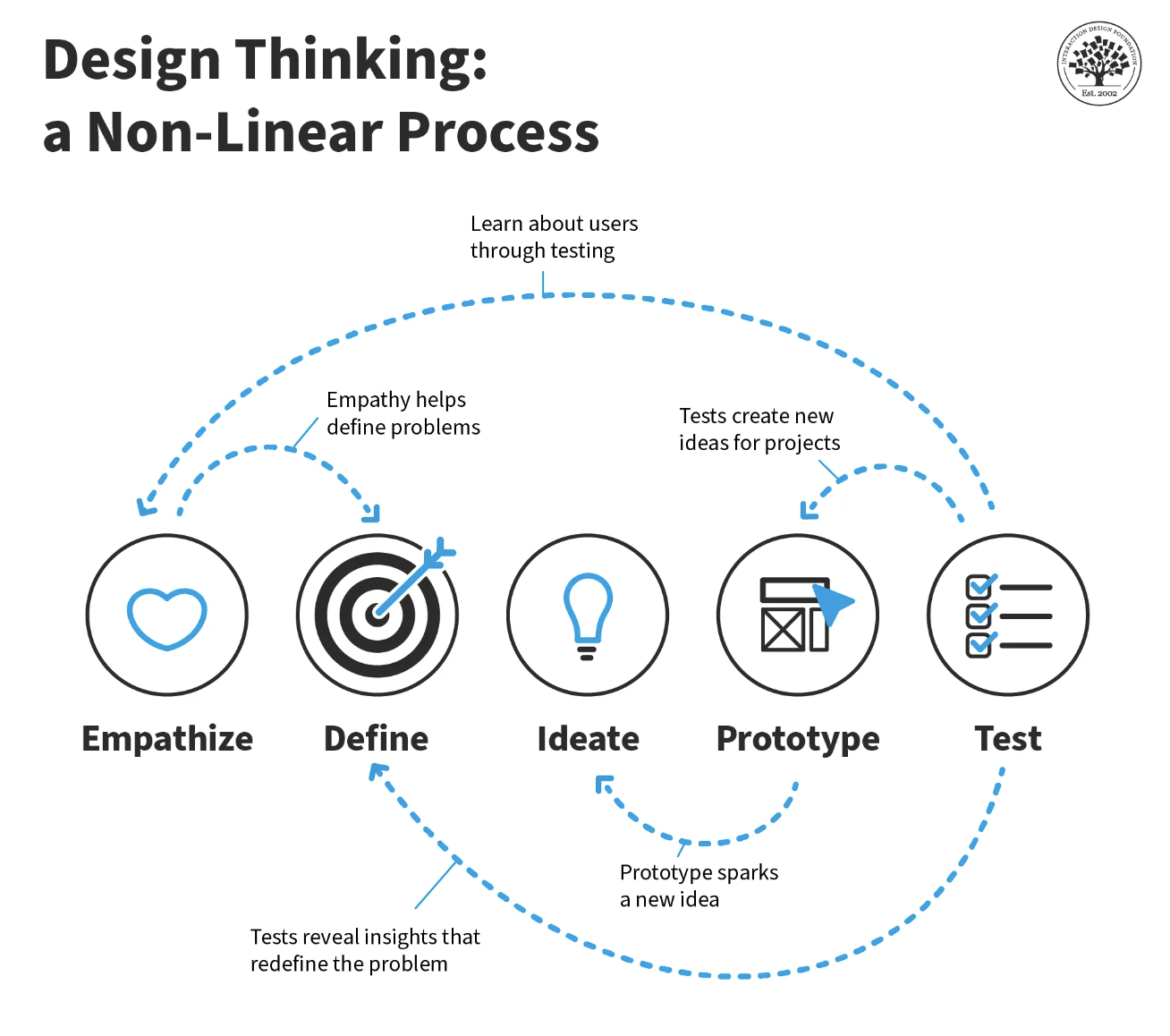

Design processes involve exploration, iteration, and movement across interconnected stages such as persona creation, problem framing, solution ideation, and prototyping. However, time and resource constraints often keep designers from exploring broadly, collecting feedback, and revisiting earlier assumptions, which makes it difficult to uphold core design principles in practice. To better understand these challenges, we conducted a formative study with 15 participants: UX practitioners, students, and instructors. Based on the findings, we developed StoryEnsemble, a tool that integrates AI into a node-link interface and leverages forward and backward propagation to support dynamic exploration and iteration across the design process. A user study with 10 participants showed that StoryEnsemble enables rapid, multi-directional iteration and flexible navigation across design stages. This work advances our understanding of how AI can foster more iterative design practices by introducing novel interactions that make exploration and iteration more fluid, accessible, and engaging.

StoryEnsemble is an interactive system designed to help users rapidly explore and flexibly iterate on personas, problem statements, solutions, and storyboards.

No. You can use it only when needed, or not at all.

StoryEnsemble offers four types of nodes, each corresponding to a stage of the design thinking process: (1) persona [Empathize]; (2) problem [Define]; (3) solution [Ideate]; and (4) storyboard [Prototype].

The system offers nodes for different ideas: (C) persona nodes, (D) problem nodes, (E) solution nodes, and (F) a storyboard node. (G) Edges map the relationships and dependencies between different ideas, showing how ideas exert influence on dependent ideas throughout the system.
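To make the node-and-edge model concrete, here is a minimal sketch of how a canvas like this could be represented. It assumes each node carries a stage (ordered as in the design-thinking process) plus free-form text attributes, and that an edge from a source to a target means "the target depends on the source." The names `Stage`, `Node`, `Canvas`, and `dependents` are hypothetical and are not StoryEnsemble's actual implementation.

```python
from dataclasses import dataclass, field
from enum import IntEnum


class Stage(IntEnum):
    """Hypothetical mapping of node types to design-thinking stages, in process order."""
    PERSONA = 1     # Empathize
    PROBLEM = 2     # Define
    SOLUTION = 3    # Ideate
    STORYBOARD = 4  # Prototype


@dataclass
class Node:
    node_id: str
    stage: Stage
    attributes: dict[str, str] = field(default_factory=dict)


@dataclass
class Canvas:
    nodes: dict[str, Node] = field(default_factory=dict)
    # Each edge is (source_id, target_id): the target depends on the source.
    edges: set[tuple[str, str]] = field(default_factory=set)

    def add(self, node: Node) -> None:
        self.nodes[node.node_id] = node

    def connect(self, source_id: str, target_id: str) -> None:
        self.edges.add((source_id, target_id))

    def dependents(self, node_id: str) -> list[str]:
        """Return the ids of nodes that directly depend on node_id."""
        return sorted(t for s, t in self.edges if s == node_id)


canvas = Canvas()
canvas.add(Node("zach", Stage.PERSONA, {"name": "Zero-waste Zach"}))
canvas.add(Node("p1", Stage.PROBLEM))
canvas.add(Node("s1", Stage.SOLUTION))
canvas.connect("zach", "p1")
canvas.connect("p1", "s1")
print(canvas.dependents("zach"))  # ['p1']
```

Using an ordered `IntEnum` for stages makes it cheap to compare two nodes' positions in the process, which is what distinguishes forward from backward propagation later on.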

The ‘Start brainstorming’ button in the upper-left corner generates chains of ideas like those shown above. Alternatively, you can click the ‘Add empty node’ button to add individual empty nodes.

See it in action.

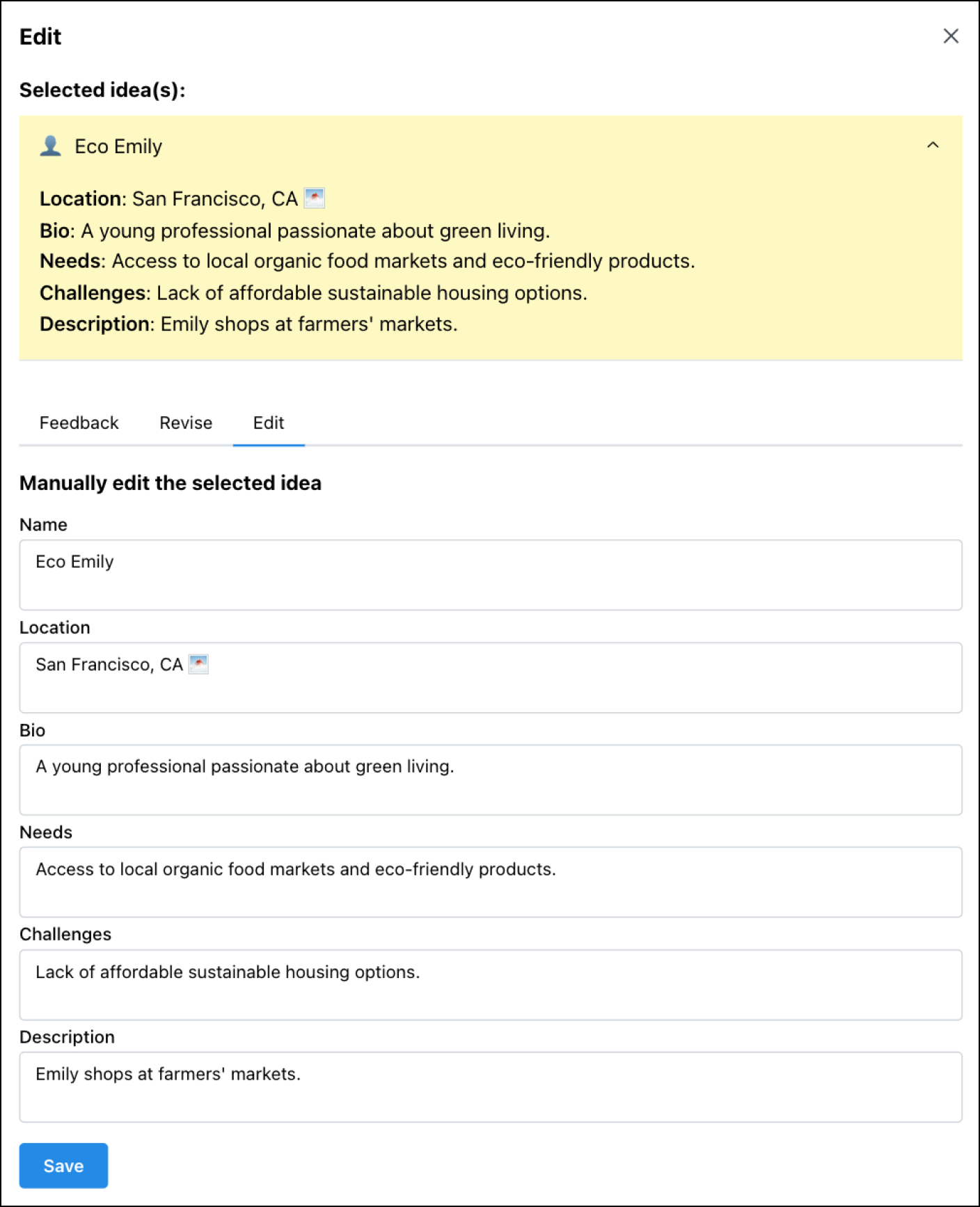

The persona, problem, and solution nodes share a similar structure but differ in their attributes. For example, the persona node helps users define target users through attributes such as name, location, needs, and challenges, as shown below.

Persona node: (A) an illustrative image, (B) a name, and (C) attributes that represent a persona. Each node has (D) a toolbar for editing and creating related nodes.

Clicking the “Edit manually” button in the toolbar opens an interface with text fields for editing a node's contents, as shown in the figure above. All node types use a similar interface.

See it in action.

These attributes can be filled in manually (“Edit manually”) or generated using AI to assist with brainstorming user profiles.

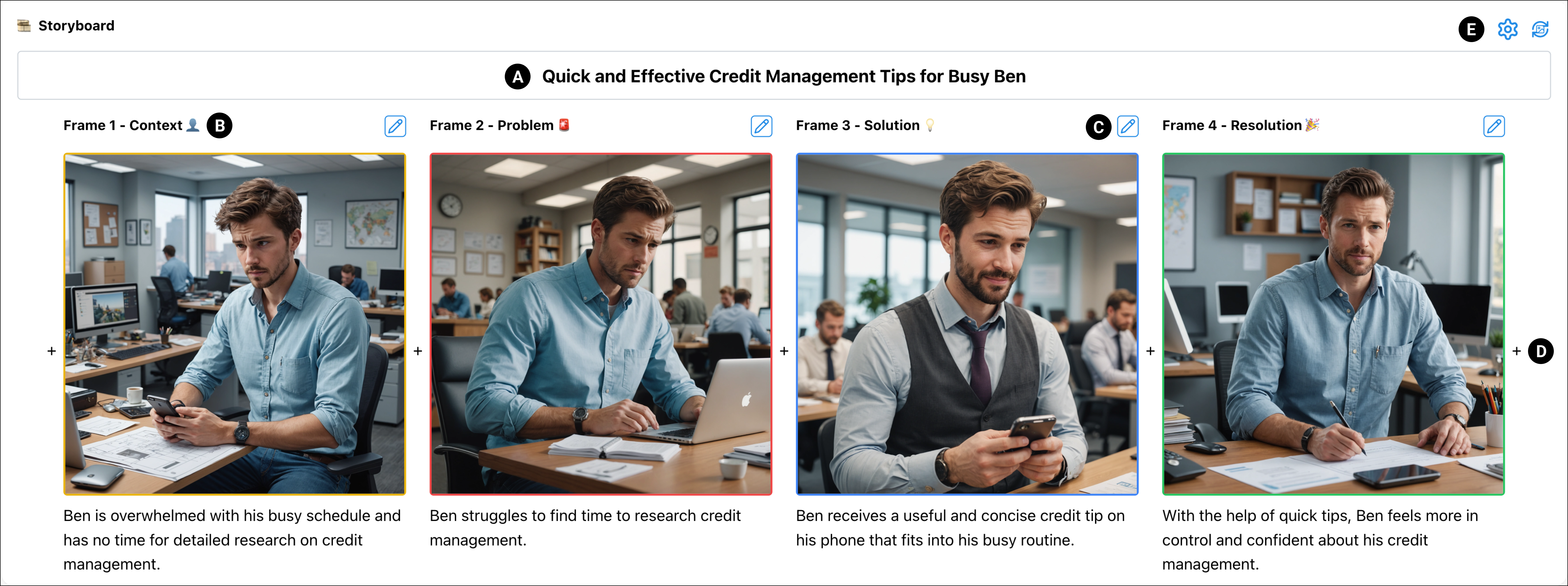

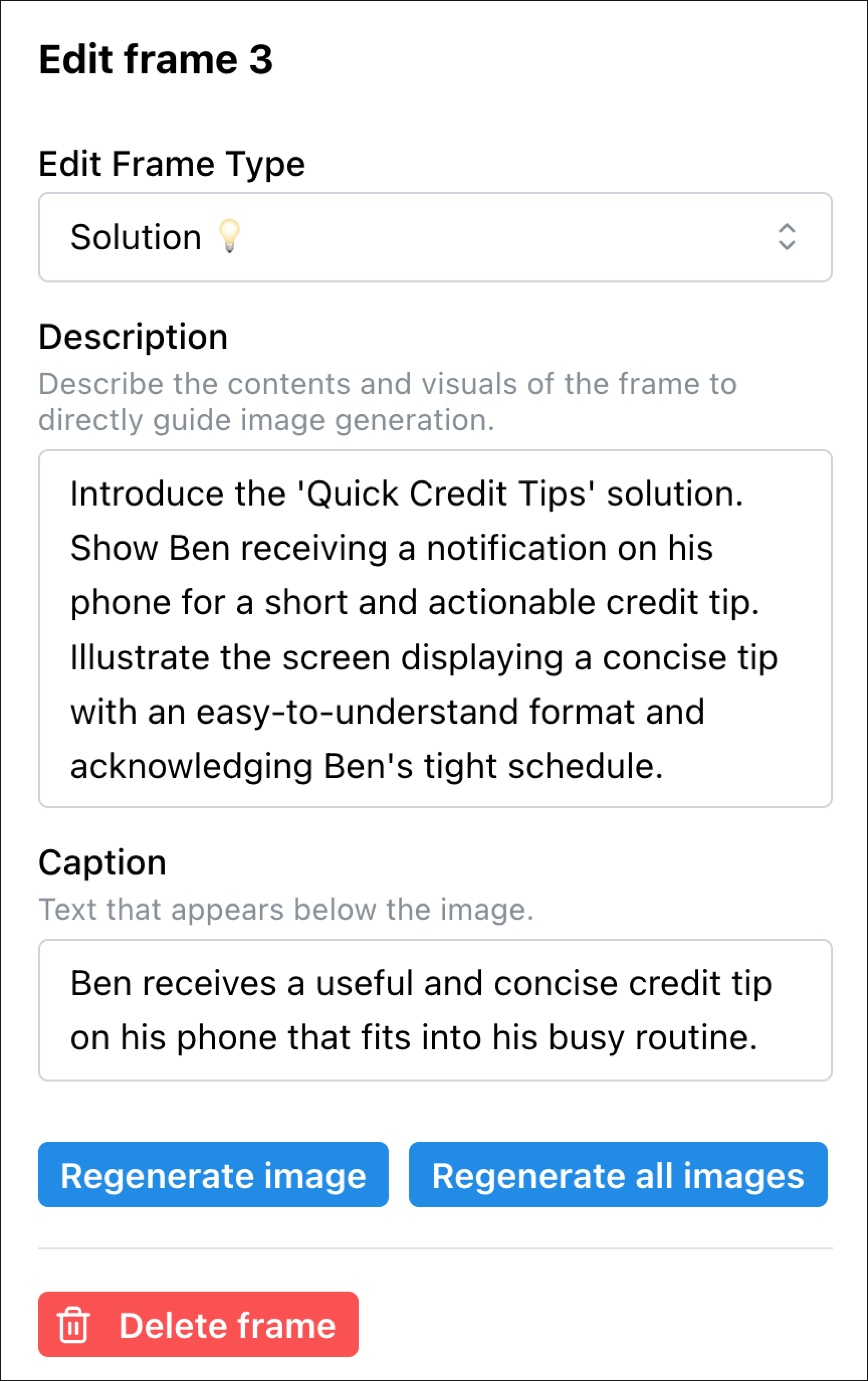

Each storyboard includes (A) a title and multiple frames, where (B) each frame has a type (e.g., Context, Problem, Solution, Resolution), an image, and a caption. Users can (C) open a panel to edit an existing frame or (D) add new frames to expand the narrative. (E) Settings let users customize the image style (e.g., digital-art, line-art, comic-book) and regenerate all storyboard images.

A pop-up panel appears when users click the edit icon ((C) in the figure above). In this panel, users can edit a frame's type, description, and caption; regenerate the frame's image or all images; or remove the frame entirely.

When users select ‘Regenerate image,’ the text in the description box is used as the prompt for generating the frame's image.
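As a rough illustration of the regeneration step, the sketch below turns a frame's description into an image prompt. It assumes the storyboard-wide style setting is appended to the description; the page does not say how the style is actually combined with the prompt, and the function name `build_image_prompt` and the style set (taken from the examples listed above, not an exhaustive list) are hypothetical.

```python
# Example styles from the storyboard settings; the real list may differ (assumption).
ALLOWED_STYLES = {"digital-art", "line-art", "comic-book"}


def build_image_prompt(description: str, style: str) -> str:
    """Combine a frame's description with the storyboard's image style (assumed format)."""
    description = description.strip()
    if not description:
        raise ValueError("frame description is empty; nothing to prompt with")
    if style not in ALLOWED_STYLES:
        raise ValueError(f"unknown style: {style!r}")
    return f"{description} Style: {style}."


print(build_image_prompt("Zach sorts recyclables in his kitchen.", "line-art"))
# Zach sorts recyclables in his kitchen. Style: line-art.
```

Keeping the style separate from the description means one settings change can regenerate every frame consistently without editing each caption.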

See it in action.

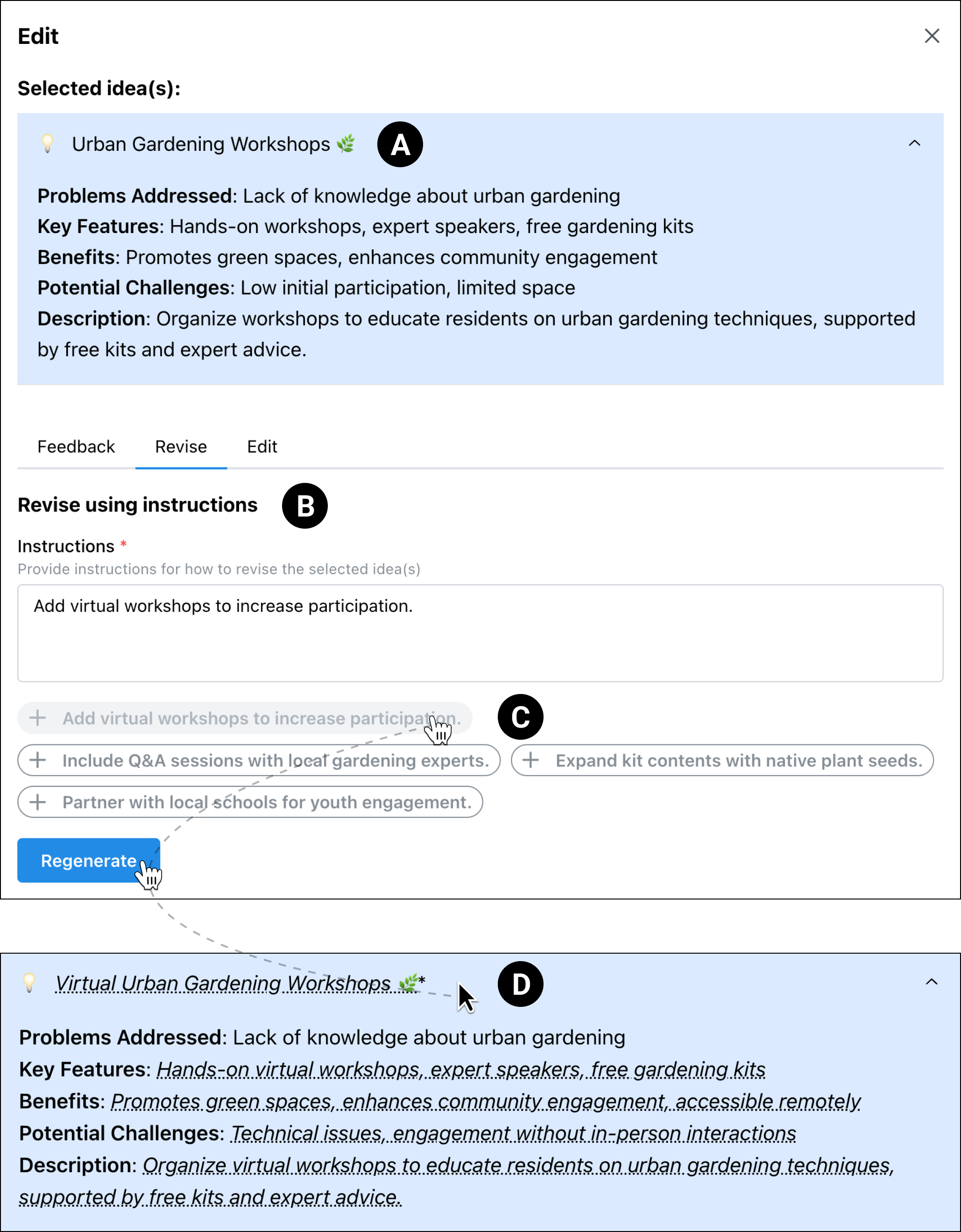

This feature allows users to update (A) idea content by providing (B) natural language prompts. (C) AI suggests instructions for iteration, and (D) revised content is automatically underlined for clarity.
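One way to underline revised content is a word-level diff between the old and new text. The sketch below uses Python's standard `difflib.SequenceMatcher` and wraps inserted or replaced words in `<u>...</u>` tags; the word-level granularity and the HTML-style markup are assumptions for illustration, and `underline_revisions` is a hypothetical name.

```python
import difflib


def underline_revisions(old: str, new: str) -> str:
    """Wrap words in `new` that were inserted or changed relative to `old` in <u>...</u>."""
    old_words, new_words = old.split(), new.split()
    matcher = difflib.SequenceMatcher(a=old_words, b=new_words)
    out: list[str] = []
    for tag, _i1, _i2, j1, j2 in matcher.get_opcodes():
        chunk = " ".join(new_words[j1:j2])
        if not chunk:
            continue  # pure deletions leave nothing to underline in the new text
        out.append(chunk if tag == "equal" else f"<u>{chunk}</u>")
    return " ".join(out)


print(underline_revisions("Zach wants to reduce food waste",
                          "Zach wants to reduce plastic waste at home"))
# Zach wants to reduce <u>plastic</u> waste <u>at home</u>
```

Diffing on words rather than characters keeps the highlights readable: a changed word is underlined whole instead of as scattered letters.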

This feature supports brainstorming. When a node has empty attributes, you can ask AI to fill them in.
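A minimal sketch of "fill in missing values," assuming the AI is asked to complete only the empty attributes, with the node's existing values passed along as context so that user-written text is never overwritten. The `generate` callable stands in for an AI call; `missing_attributes`, `fill_missing`, and `fake_generate` are hypothetical names.

```python
from typing import Callable

Attributes = dict[str, str]


def missing_attributes(attributes: Attributes) -> list[str]:
    """Return attribute names whose values are empty or whitespace-only."""
    return [name for name, value in attributes.items() if not value.strip()]


def fill_missing(
    attributes: Attributes,
    generate: Callable[[Attributes, list[str]], Attributes],
) -> Attributes:
    """Ask `generate` (a stand-in for an AI call) to fill only the empty attributes."""
    missing = missing_attributes(attributes)
    filled = dict(attributes)  # copy so the caller's node is left untouched
    if not missing:
        return filled
    suggestions = generate(attributes, missing)
    for name in missing:
        if name in suggestions:
            filled[name] = suggestions[name]  # user-written values are never replaced
    return filled


def fake_generate(context: Attributes, missing: list[str]) -> Attributes:
    # Hypothetical stub; a real system would prompt a model with `context`.
    return {name: f"<suggested {name}>" for name in missing}


persona = {"name": "Zero-waste Zach", "location": "", "needs": "Less packaging", "challenges": " "}
print(fill_missing(persona, fake_generate))
```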

See it in action.

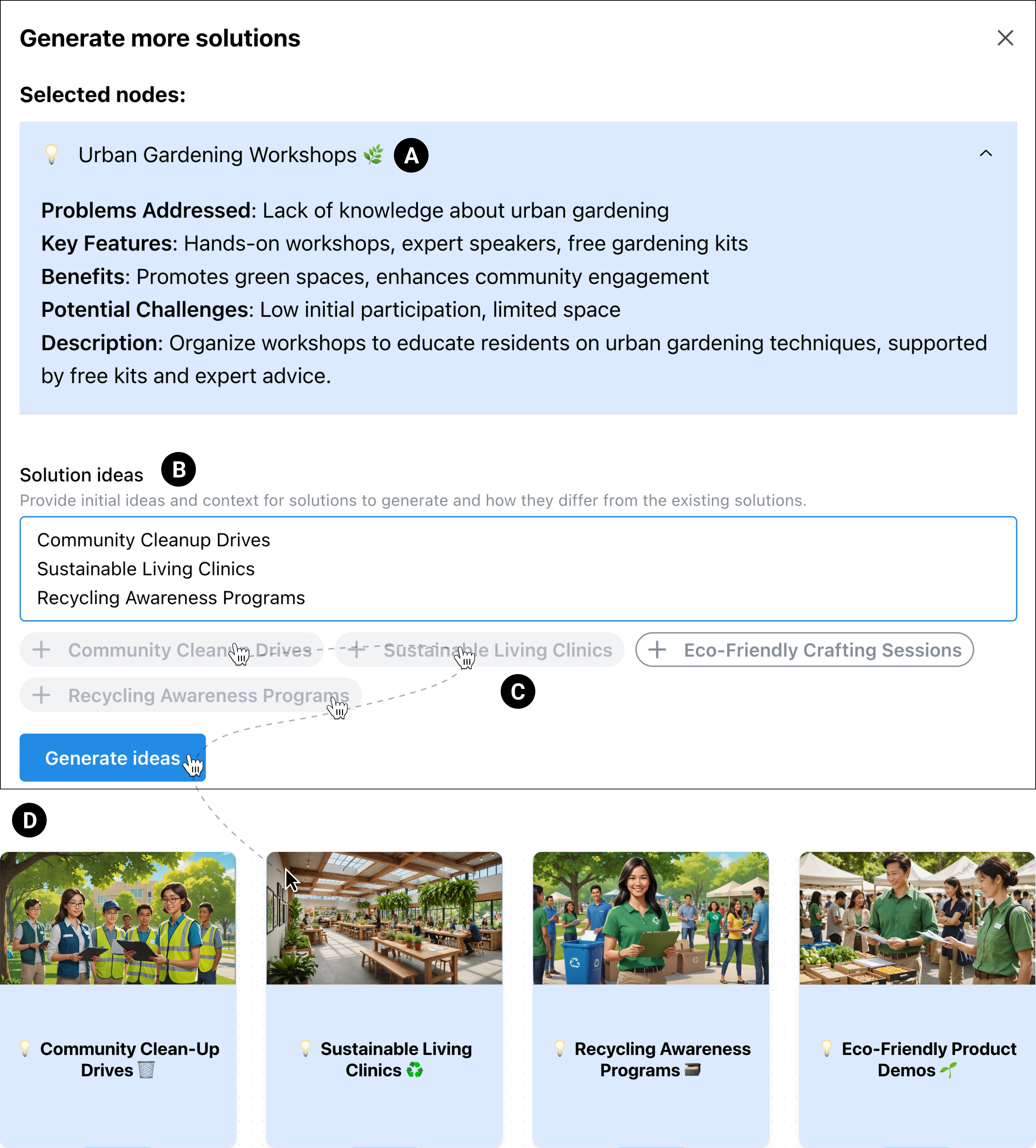

Users can build on (A) a selected idea by (B) entering their own suggestions or (C) utilizing AI-generated recommendations. The system then generates (D) additional ideas to explore further possibilities.

See it in action.

You can get AI-generated feedback on any node.

See it in action.

AI suggestions are generated in the Revise tab of every node and when users generate more ideas.

See it in action.

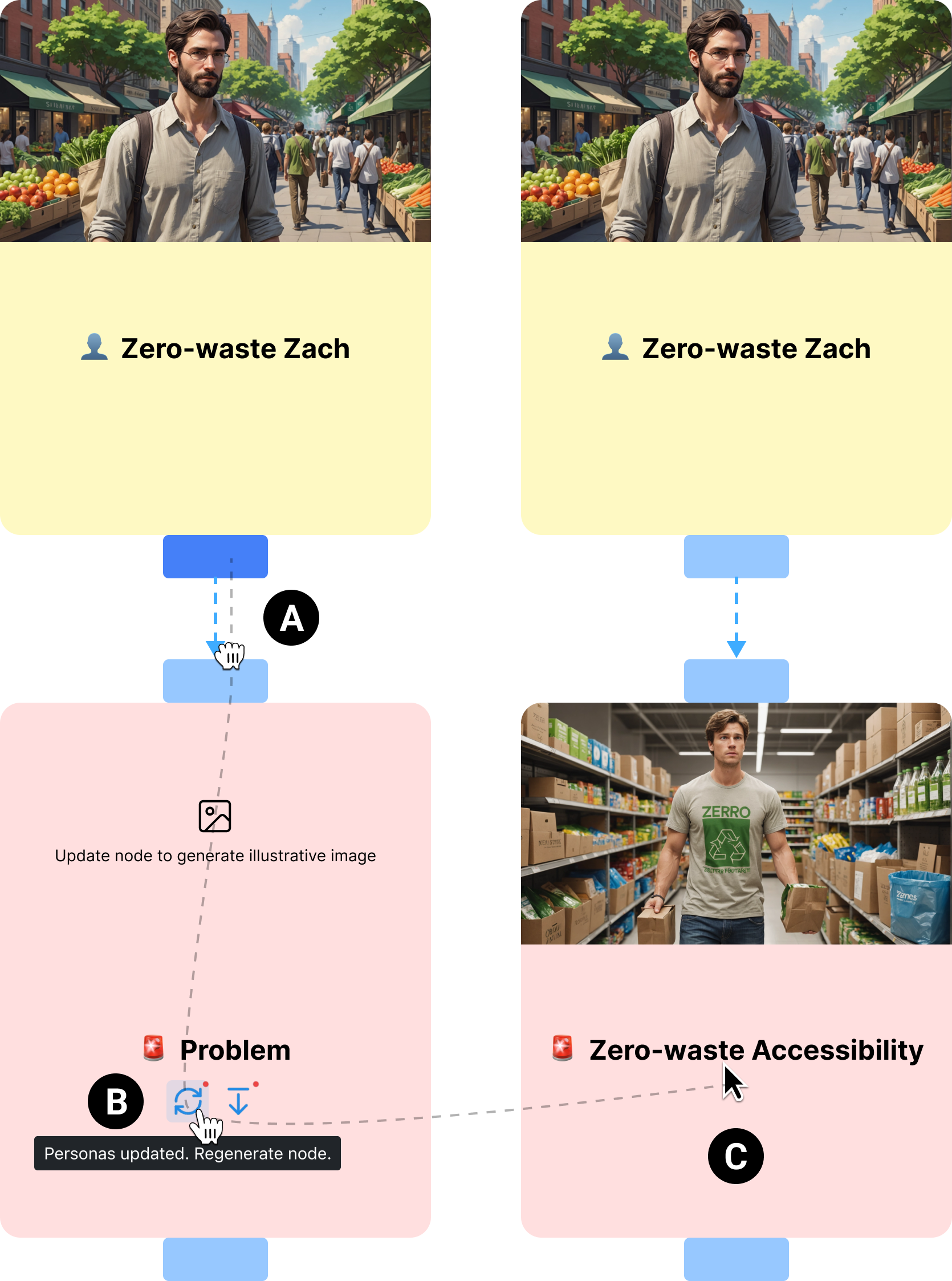

Users can cascade changes from an earlier design stage to a later one (e.g., from a persona to a problem statement) by (A) connecting the nodes with an edge or updating an already connected node (here, the persona Zero-waste Zach), then (B) refreshing the later artifact to generate (C) an updated artifact.
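Because refreshing is a separate step (B), a change to one node can simply flag everything downstream as out of date. The sketch below does this with a breadth-first traversal over dependency edges, assuming edges are `(source, target)` pairs where the target depends on the source. It only computes which nodes would need a refresh and does not regenerate anything; `mark_stale` and the node ids are hypothetical.

```python
from collections import deque


def mark_stale(edges: set[tuple[str, str]], changed: str) -> list[str]:
    """Return every node downstream of `changed`, in breadth-first order."""
    children: dict[str, list[str]] = {}
    for source, target in edges:
        children.setdefault(source, []).append(target)
    for targets in children.values():
        targets.sort()  # deterministic order for display
    stale: list[str] = []
    seen = {changed}
    queue = deque([changed])
    while queue:
        current = queue.popleft()
        for nxt in children.get(current, []):
            if nxt not in seen:  # guards against cycles and shared descendants
                seen.add(nxt)
                stale.append(nxt)
                queue.append(nxt)
    return stale


edges = {("zach", "problem-1"), ("problem-1", "solution-1"),
         ("problem-1", "solution-2"), ("solution-1", "storyboard")}
print(mark_stale(edges, "zach"))
# ['problem-1', 'solution-1', 'solution-2', 'storyboard']
```

Marking nodes stale instead of regenerating them immediately leaves the user in control of when AI rewrites each downstream artifact.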

See it in action.

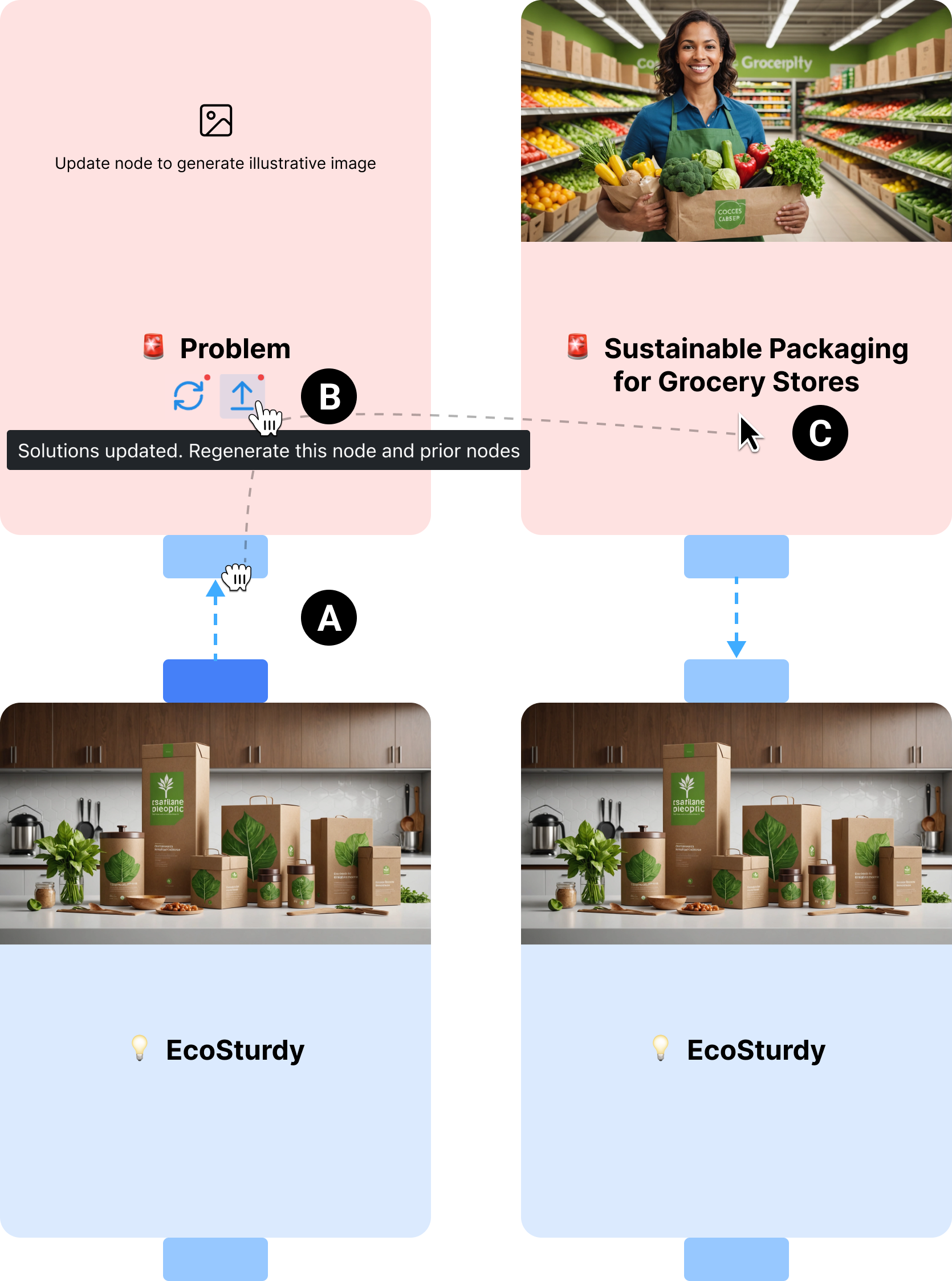

Users can cascade changes from a later design stage to an earlier one (e.g., extracting a problem statement from a solution).

When users (A) connect a solution to a problem, (B) a notification prompts them to refresh the problem, which (C) updates the problem to reflect the solution.
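Forward and backward propagation can be told apart by comparing the stages of the two connected nodes. The sketch below assumes the edge is drawn from the node supplying information to the node being refreshed (persona to problem in the forward case, solution to problem in the backward case) and that stages are ordered as in the design-thinking process. The function names and notification wording are hypothetical.

```python
from enum import IntEnum


class Stage(IntEnum):
    PERSONA = 1
    PROBLEM = 2
    SOLUTION = 3
    STORYBOARD = 4


def propagation_direction(source: Stage, target: Stage) -> str:
    """Classify a new edge from `source` to `target` by design-stage order."""
    if source < target:
        return "forward"   # e.g., persona -> problem: update a later artifact
    if source > target:
        return "backward"  # e.g., solution -> problem: revise an earlier artifact
    return "same-stage"


def on_connect(source: Stage, target: Stage, target_id: str) -> str:
    """Build the refresh notification shown on the target node (hypothetical wording)."""
    direction = propagation_direction(source, target)
    return f"Refresh {target_id} ({direction} propagation from {source.name.lower()})"


print(on_connect(Stage.SOLUTION, Stage.PROBLEM, "problem-1"))
# Refresh problem-1 (backward propagation from solution)
```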

See it in action below.

You can download canvas and storyboard images. Please upload them to the assigned GDrive folder at the end of the prototype session.

See it in action.

The simple workflow below shows how to upload images to each frame, as well as how to use AI to generate images and set their style.

The more complex workflow below combines several techniques.

This workflow shows how you can steer solution ideas from a problem node and also steer the generation of images in a storyboard.

This workflow shows how you can brainstorm feature ideas with AI.

See StoryEnsemble in action in this Video Demo.